NVIDIA Grace Hopper Systems Gather at GTC

Mar 04, 2024

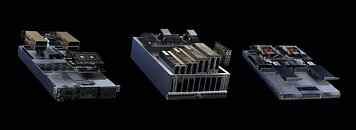

MGX Speeds Time to Market

NVIDIA MGX is a blueprint for building accelerated servers with any combination of GPUs, CPUs and data processing units (DPUs) for a wide range of AI, high-performance computing and NVIDIA Omniverse applications. It's a modular reference architecture for use across multiple product generations and workloads.

GTC attendees can get an up-close look at MGX models tailored for enterprise, cloud and telco-edge uses, such as generative AI inference, recommenders and data analytics.

The pavilion will showcase accelerated systems packing single and dual GH200 Superchips in 1U and 2U chassis, linked via NVIDIA BlueField-3 DPUs and NVIDIA Quantum-2 400 Gb/s InfiniBand networks over LinkX cables and transceivers. The systems support industry standards for 19- and 21-inch rack enclosures, and many provide E1.S bays for nonvolatile storage.

Grace Hopper in the Spotlight

Here's a sampler of MGX systems now available:

- ASRock RACK's MECAI, measuring 450 x 445 x 87 mm, accelerates AI and 5G services in constrained spaces at the edge of telco networks.

- ASUS's MGX server, the ESC NM2N-E1, slides into a rack that holds up to 32 GH200 processors and supports air- and water-cooled nodes.

- Foxconn provides a suite of MGX systems, including a 4U model that accommodates up to eight NVIDIA H100 NVL PCIe Tensor Core GPUs.

- GIGABYTE's XH23-VG0-MGX can accommodate plenty of storage in its six 2.5-inch Gen5 NVMe hot-swappable bays and two M.2 slots.

- Inventec's systems can slot into 19- and 21-inch racks and use three different implementations of liquid cooling.

- Lenovo supplies a range of 1U, 2U and 4U MGX servers, including models that support direct liquid cooling.

- Pegatron's air-cooled AS201-1N0 server packs a BlueField-3 DPU for software-defined, hardware-accelerated networking.

- QCT can stack 16 of its QuantaGrid D74S-IU systems, each with two GH200 Superchips, into a single QCT QoolRack.

- Supermicro's ARS-111GL-NHR with nine hot-swappable fans is part of a portfolio of air- and liquid-cooled GH200 and NVIDIA Grace CPU systems.

- Wiwynn's SV7200H, a 1U dual GH200 system, supports a BlueField-3 DPU and a liquid-cooling subsystem that can be remotely managed.

- Wistron's MGX servers are 4U GPU systems for AI inference and mixed workloads, supporting up to eight accelerators in one system.

The new servers are in addition to three accelerated systems using MGX announced at COMPUTEX last May: Supermicro's ARS-221GL-NR, which uses the Grace CPU, and QCT's QuantaGrid S74G-2U and S74GM-2U, both powered by the GH200.

Grace Hopper Packs Two in One

System builders are adopting the hybrid processor because it packs a punch.

GH200 Superchips combine a high-performance, power-efficient Grace CPU with a muscular NVIDIA H100 GPU. They share hundreds of gigabytes of memory over a fast NVIDIA NVLink-C2C interconnect.

The result is a processor and memory complex well suited to today's most demanding jobs, such as running large language models. GH200 Superchips have the memory and speed needed to link generative AI models to data sources that can improve their accuracy using retrieval-augmented generation, aka RAG.

Recommenders Run 4x Faster

In addition, the GH200 Superchip delivers greater efficiency and up to 4x more performance than using the H100 GPU with traditional CPUs for tasks like making recommendations for online shopping or media streaming.

In their debut on the MLPerf industry benchmarks last November, GH200 systems ran all data center inference tests, extending the already leading performance of H100 GPUs.

In all these ways, GH200 systems are taking to new heights a computing revolution their namesake helped start on the first mainframe computers more than seven decades ago.
